Data containerization caught my eye some time ago, and I think software developers, front-end ones included, even at a junior level, should at least know about it. It's part of the software's backend lifecycle. Not knowing it is like being an intern at a car mechanic's who knows that the drive shaft spins, but not how it works or what it does.

Separate? Put it in jail!

So imagine you have a drawer full of pairs of socks, each pair in a different colour. But they're scattered and mixed. Now you want to change something: get rid of the yellow socks, or swap all the yellow socks for blue ones. But there are so many of them. It takes time and resources to find them, sort them and so on. What if you took all the socks out, matched the pairs by colour, then put them into separate, labelled boxes? That would be overkill in real life, but in backend engineering it makes a lot of sense. Separating data simplifies managing it and, what's more, segmentation is safer. Simplifying the whole topic: containerized apps are like those sock boxes, separated and labelled.

You might think it's a new concept that everybody in IT has been hyped about for a few years just because it's new. It's actually not. Jailing processes and containers (to be more precise, the chroot operation) were introduced in Unix operating systems (e.g. FreeBSD) many years ago. In general, the chroot operation changes the root directory of a process to separate it from the rest of the system. This creates a kind of safe environment, so that an error or attack inside it won't affect the whole system. The chroot operation and its use have changed and been modified over the years.
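To make the chroot idea a bit more concrete, here is a minimal Python sketch. It does not perform a real chroot (the actual call, `os.chroot`, needs root privileges); it only models how a path seen by a jailed process maps to a path on the host. The function name `host_path` and the directory `/srv/jail` are made up for illustration.

```python
import posixpath


def host_path(jail_root: str, path_in_jail: str) -> str:
    """Map a path as seen from inside the jail to the real path on the host.

    This is only a model of the idea, not a real chroot.
    """
    # Resolve "." and ".." against "/" first: inside the jail,
    # going "up" from the root just stays at the root.
    inside = posixpath.normpath(posixpath.join("/", path_in_jail))
    # Everything the process sees lives under jail_root on the host.
    return posixpath.normpath(jail_root + inside)


print(host_path("/srv/jail", "/etc/passwd"))       # /srv/jail/etc/passwd
print(host_path("/srv/jail", "../../etc/passwd"))  # /srv/jail/etc/passwd
```

Both calls end up inside `/srv/jail`: the process cannot name a file outside its new root, which is the whole point of the jail.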

There is more to it than colours and socks

There is now so much data that managing it without sorting solutions would be really difficult. But hey, stop! We still have virtual machines, where we can do whatever we want on whatever operating system we need. So why has there been so much hype recently about something similar to the already well-known VMs?

Some of the biggest advantages of containerization over virtual machines are efficiency and scalability. Containers don't each run a separate operating system like VMs do; they share the host's kernel, so they're lighter. They include only the libraries and dependencies needed to run a certain app. Moreover, layers can be shared between containers, and one container can serve others (e.g. a reverse proxy container can expose apps running in different containers to an outside network). Scalability, because you can easily add instances to handle more load. You can use containerization software both for private, small-load use and for huge-scale operations, using as few resources as possible.
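The "just add instances" part can be pictured with a toy model. The sketch below is not how Docker or any real proxy does it; it only shows round-robin dispatch over a list of instances, where scaling up means appending one more name. The class `RoundRobinPool` and the names `app-1`, `app-2`, `app-3` are hypothetical.

```python
from itertools import count


class RoundRobinPool:
    """Toy model: hand requests to app instances in turn."""

    def __init__(self, instances):
        self.instances = list(instances)
        self._counter = count()  # running request number: 0, 1, 2, ...

    def add_instance(self, name):
        # Scaling up is just registering one more instance.
        self.instances.append(name)

    def pick(self):
        # Take the next request number and wrap it around the pool size.
        index = next(self._counter) % len(self.instances)
        return self.instances[index]


pool = RoundRobinPool(["app-1", "app-2"])
print([pool.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-1', 'app-2']
pool.add_instance("app-3")              # more load? add another instance
```

After `add_instance`, the new instance simply joins the rotation and takes its share of the requests.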

What software do I use? Docker!

It's one of the most popular tools for this purpose. It's usable not only on servers (from small home labs to big data centers) but also on home computers. It can be installed on most operating systems (Linux distributions, macOS, Windows). It's even integrated with programming tools like VS Code.

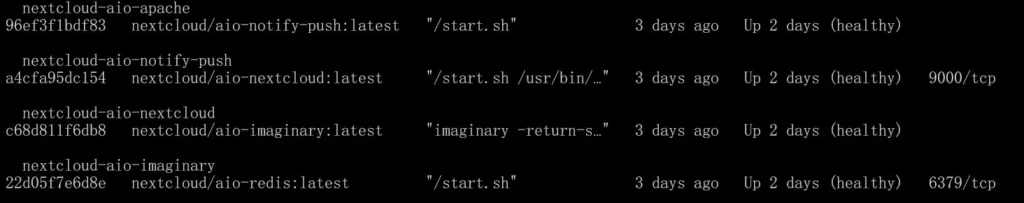

My home server runs a lite version of Debian Bookworm and has Docker installed. I have containerized a few apps and I still do a lot of experiments with them, mostly on the security side, like connecting a simple database to a main app through virtual, internal LAN networks. I also run a reverse proxy server, which serves my cloud where I'm testing different solutions. I use Pi-hole, which, for example, eliminates ads in my home network through DNS changes.
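The DNS trick behind ad blocking can be sketched in a few lines. This is only a simplified model of a DNS sinkhole, not Pi-hole's actual code: blocked names get a dead-end address such as `0.0.0.0`, everything else goes to a normal resolver. The blocklist entries, the `resolve` function and the fake upstream lookup are all assumptions made up for the example.

```python
# Hypothetical blocklist; a real one holds many thousands of domains.
BLOCKLIST = {"ads.example.com", "tracker.example.net"}
SINKHOLE = "0.0.0.0"  # dead-end address returned for blocked names


def resolve(domain, upstream, blocklist=BLOCKLIST):
    """Answer a DNS query: sinkhole blocked names, forward the rest."""
    # DNS names are case-insensitive and may end with a trailing dot.
    name = domain.lower().rstrip(".")
    if name in blocklist:
        return SINKHOLE
    # Not blocked: ask the real resolver (passed in as a function).
    return upstream(name)


# Stand-in for a real upstream DNS server, using a documentation-only IP.
fake_upstream = {"example.org": "192.0.2.10"}.get

print(resolve("ads.example.com", fake_upstream))  # 0.0.0.0
print(resolve("example.org", fake_upstream))      # 192.0.2.10
```

Because every device on the network asks the same resolver, the ad never loads anywhere, with nothing installed on the devices themselves.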

Conclusion

Knowledge about data containerization is a great add-on at first, and later a must, when learning software development (even just frontend!) and IT. Why?

Because:

- it simplifies software management,

- it has a great community and documentation,

- you can experiment with it on your local machine, in a small homelab or in the cloud,

- it's versatile: it can be used on both a small and a huge scale,

- in the future you will work with containers,

- it's nice to know something new 🙂